Our group works mainly in Natural Language Processing, Multimodal Learning, and Machine Learning. We are particularly interested in building efficient models and benchmarks that move machines toward human-level intelligence. We are grateful to NSF, DARPA, IARPA, the U.S. Air Force, Amazon (AWS and Alexa AI), Meta AI, Google, Intuit, and The Washington Post for supporting our research!

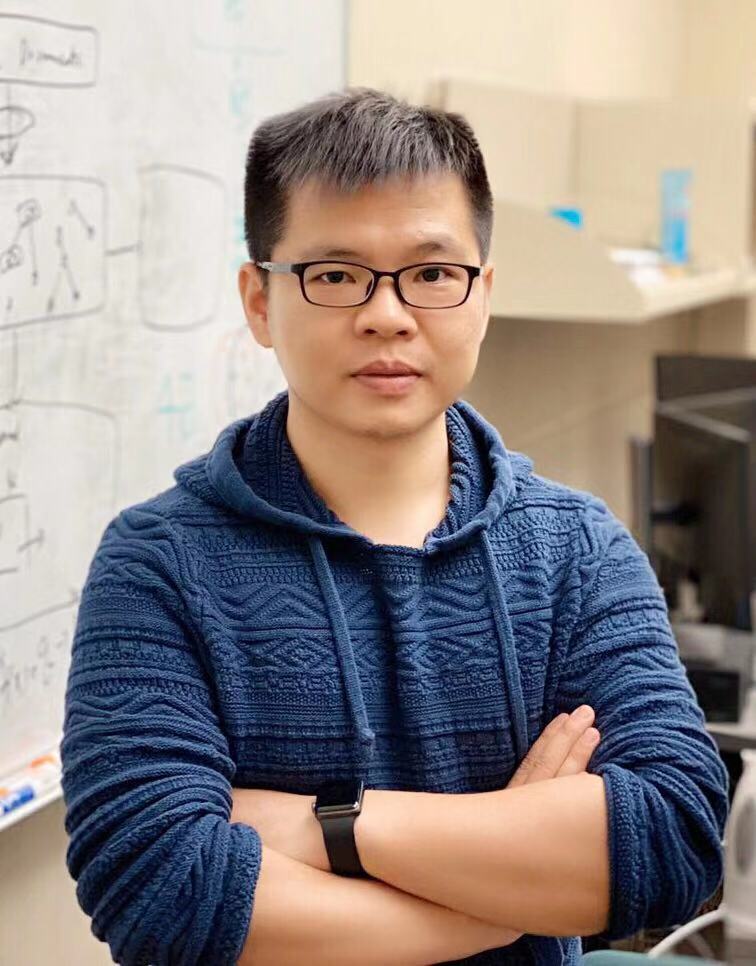

Ph.D. Students

- Zhiyang Xu: Multimodal Foundation Models (Fall 2021)

- Minqian Liu: Continual Learning, Generative AI and Evaluation (Fall 2021)

- Ying Shen: Multimodal Learning, Parameter-Efficient Tuning (Fall 2021)

- Jingyuan Qi: Knowledge-Enhanced Learning, Reasoning and Generation with LLMs (Fall 2022)

- Menglong (Barry) Yao: Multimodal Knowledge Extraction and Verification, Curriculum Learning (Fall 2022)

- Zihao Lin: Knowledge Editing of LLMs, Retrieval-Augmented LLMs (Fall 2023)

- Mohammad Beigi: Interpretation of LLMs, Uncertainty Estimation and Calibration for LLMs (Fall 2023)

MS Students

- Tong Zhou: Dual Learning for Information Extraction, Multimodal Learning

Visiting Students

- Haibo Wang (MS from Fudan University): Fine-grained Video Understanding and Generation

- Yuexi Shen (MS from University of California, Santa Barbara): Evaluation for Multimodal Generation

Alumni

- Sijia Wang (PhD, 2020-2024), now a Research Scientist at Amazon AWS AI

- Yue Zhang (PhD from MSU, 2024)

- Zoe Zheng (MS from VT, co-advised with Chris Thomas, 2022-2024)

- Xiaochu Li (MS from VT, 2021-2023)

- Trevor Ashby (MS from VT, 2023-2024)

- Sai Gurrapu (MS from VT, co-advised with Feras Batarseh, 2022)

- Pei Wang (MS from VT, co-advised with Jin-Hee Cho, 2021-2022)

- Zaid Al Nouman (undergrad from VT, 2022)

- Zijian Jin (visiting MS from New York University, 2022)

- Moksh Shukla (visiting undergrad from IIT Kanpur, 2022)

- Sidhant Chandak (visiting undergrad from IIT Kanpur, 2022)

- Barry Menglong Yao (visiting MS from University at Buffalo, 2022)

- Mingchen Li (visiting MS from Georgia State University, 2022)

- Pritika Ramu (visiting undergrad from BITS Pilani, 2022-2023)

Davis, CA, US

Google Scholar

Twitter